The Logic of Causal Conclusions: How we know that fire burns, fertilizer helps plants grow, and vaccines prevent disease

I usually cringe when I read a comment by a skeptic arguing that “correlation does not prove causation”. Of course, it’s true that correlation does not prove causation. It’s even true that correlation does not always imply causation. There are many great examples of spurious correlations which demonstrate clearly just how silly it is to extrapolate cause from correlation. And the problem is not trivial. Headlines in popular press articles alone can be very damaging as most people simply accept them as true.

BUT…

I cringe because I am afraid that this oversimplification leads people to think that correlation plays no role in causal inference (inferring that X causes Y). It does. In fact, it plays a very important role, one that skeptics should be just as aware of as the sound bite “correlation does not imply causation”: causation cannot be logically inferred in the absence of a correlation.

What’s more, that sound bite does nothing to educate people about how and when we should infer cause. So let’s take a look at both problems.

Causation From Correlation

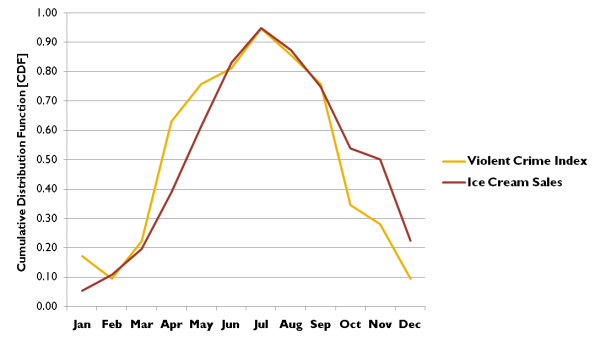

A classic example used to illustrate the problem is the very real relationship between ice cream sales and violent crime. As you can see, when sales of ice cream go up, violent crime increases.

So, should we stop selling ice cream? Of course not.

There are basically two problems with drawing causal conclusions from a correlation:

- There may very well be a causal relationship, but the causal arrow is unclear. For example, it could be that eating ice cream makes people violent (“sugar high” is a myth, but perhaps it’s milk allergies?). Or, it could be that people get hungry after they’ve just held up a liquor store.

- There is another variable involved. Most people eventually realize that the correlation of ice cream sales and violent crime is spurious. In other words, it is the result of a common cause: temperature. People are much more likely to eat ice cream in the summer, when it’s warm outside, and, for various reasons, they are also much more likely to commit violent crimes when it’s warm.
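To make the common-cause problem concrete, here is a minimal simulation sketch in Python. Every number in it is invented for illustration; the only thing built into the data is that temperature pushes both ice cream sales and crime, and neither one influences the other. The helper names (`pearson`, `residuals`) are my own, not from any library.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

def residuals(ys, xs):
    """What is left of ys after subtracting its best straight-line fit on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return [y - (my + slope * (x - mx)) for x, y in zip(xs, ys)]

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Invented daily data: temperature drives both outcomes.
temperature = [rng.uniform(0, 35) for _ in range(2000)]
ice_cream = [10 + 2.0 * t + rng.gauss(0, 10) for t in temperature]
crime = [5 + 0.5 * t + rng.gauss(0, 4) for t in temperature]

# Raw correlation between the two outcomes.
raw_r = pearson(ice_cream, crime)

# Correlation that remains once the linear effect of temperature
# has been removed from both (a partial correlation).
partial_r = pearson(residuals(ice_cream, temperature),
                    residuals(crime, temperature))

print(f"ice cream vs. crime:            r = {raw_r:.2f}")
print(f"same, temperature held fixed:   r = {partial_r:.2f}")
```

In this toy world the raw correlation is strong, and it all but vanishes once temperature is accounted for. Real data are rarely this tidy, and controlling for one variable says nothing about confounders nobody measured.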

Causes

So if correlation doesn’t prove causation, what does?

Well, nothing does. We can’t “prove” anything, and that’s not really what science tries to do. But that’s a point for a different day.

So when can we reasonably infer that X causes Y? It is difficult to reach the bar of causal inference, but the requirements for doing so are actually pretty simple.

First, let’s define “cause” and “effect”*:

Cause

“…that which makes any other thing, either simple idea, substance or mode, begin to be…”

Effect

“…that, which had its beginning from some other thing…”

Confused? Well, let’s simplify it a bit:

A cause is a condition under which an effect occurs.

An effect is the difference between what happened (or is) and what would have happened (or been) had the cause not been present.

I should note here that an “effect” is always a comparison of at least two things. Everything is relative; that’s often a difficult concept to wrap one’s brain around when we are talking about specific examples, but it’s important.

So, let me repeat: a cause is a condition under which an effect occurs.

Causal conditions can be:

- necessary

- sufficient

- necessary AND sufficient

- neither necessary nor sufficient

A condition is necessary if the effect cannot occur without the condition. For example:

To receive credit for a course, you must be enrolled in the course.

In this case, the condition is necessary, but not sufficient. You don’t get credit if you are not enrolled, but enrollment does not guarantee credit (you usually need a passing grade, too).

A condition is sufficient if the effect always occurs when the condition is met. For example:

Decapitation results in death (in humans, at least).

In this case, the condition is sufficient, but not necessary. Nobody can survive without their head, but death can occur in many ways.

For a condition to be both necessary and sufficient, the effect must always occur when the condition is met and it must never occur unless the condition is met. For example:

To win the lottery, you must present a ticket with the correct numbers to the appropriate authorities.

Or this:

To be a parent, you must have a child.
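The definitions of necessary and sufficient can be written down as two simple checks. The following is a rough sketch in Python; `classify_condition` and the tiny record sets are hypothetical, made up to mirror the examples above. Keep in mind that a finite set of observations can only contradict necessity or sufficiency; it cannot establish either one.

```python
def classify_condition(observations):
    """Classify a condition from (condition_met, effect_occurred) pairs.

    necessary:  the effect never occurs without the condition
    sufficient: the effect always occurs when the condition is met
    """
    necessary = all(cond for cond, effect in observations if effect)
    sufficient = all(effect for cond, effect in observations if cond)
    if necessary and sufficient:
        return "necessary and sufficient"
    if necessary:
        return "necessary"
    if sufficient:
        return "sufficient"
    return "neither necessary nor sufficient"

# Hypothetical records of (enrolled, received_credit).
course = [(True, True), (True, False), (False, False), (True, True)]

# Hypothetical records of (decapitated, died).
decapitation = [(True, True), (False, True), (False, False)]

# Hypothetical records of (has_child, is_parent).
parenthood = [(True, True), (False, False)]

print(classify_condition(course))        # necessary
print(classify_condition(decapitation))  # sufficient
print(classify_condition(parenthood))    # necessary and sufficient
```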

The last one is the tricky one. A cause can be neither necessary nor sufficient, but if it is neither, it must meet another requirement: it must be a non-redundant part of a sufficient condition. This would make it an:

Insufficient

Non-redundant

Unnecessary part of a

Sufficient condition

Or an INUS condition.

The truth is that most causes in the world are INUS conditions. In the social sciences, we deal mostly with INUS conditions.

The big question is how do we identify them?

Well, let’s look at the question of what might cause a forest fire. Some possible causes:

- A lit match tossed from a car

- A lightning strike

- A smoldering campfire

None of these are necessary conditions for a forest fire to start. Science assumes that all effects have causes, so we assume that something occurs to start a fire, but it does not need to be something described here. But all are INUS conditions.

Taking just one as an example, a lit match from a car is not necessary for a forest fire to start since fires can start a number of ways, nor is it sufficient. If tossing a lit match out a car window always ended with forest fires, we’d have a lot more forest fires. There are other conditions which must be met: the match must remain hot long enough to start combustion, there must be oxygen to feed it, and the weather and leaves must be dry enough to keep from smothering it. If those things are met, then the condition as a whole is sufficient. But it must also be non-redundant. If something else in the mix does the job of the match, then the match cannot be considered a cause. Well, oxygen alone cannot start a fire, nor can dry weather, therefore the lit match is a non-redundant part of a sufficient condition.
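The forest fire reasoning can be sketched as a small model. This is only an illustration under a strong assumption: that we already know the complete list of sufficient conditions, which in real research we never do. The names `SUFFICIENT_SETS`, `starts_fire`, and `is_inus` are hypothetical, and the three sets are a drastic simplification of what it takes to start a fire.

```python
# Assumed (and oversimplified) sufficient conditions for a forest fire.
# Each set is sufficient as a whole when all of its members are present.
SUFFICIENT_SETS = [
    frozenset({"lit match", "oxygen", "dry leaves"}),
    frozenset({"lightning strike", "oxygen", "dry leaves"}),
    frozenset({"smoldering campfire", "oxygen", "dry leaves"}),
]

def starts_fire(present):
    """True if every member of at least one sufficient set is present."""
    return any(s <= set(present) for s in SUFFICIENT_SETS)

def is_inus(factor):
    """Check the four parts of the INUS definition for one factor."""
    # Insufficient: the factor alone does not produce the effect.
    insufficient = not starts_fire({factor})
    # Unnecessary: at least one sufficient set works without it.
    unnecessary = any(factor not in s for s in SUFFICIENT_SETS)
    # Non-redundant part of a sufficient condition: some sufficient set
    # contains the factor and stops being sufficient when it is removed.
    non_redundant = any(
        factor in s and not starts_fire(s - {factor})
        for s in SUFFICIENT_SETS
    )
    return insufficient and unnecessary and non_redundant

print(is_inus("lit match"))  # True
print(is_inus("oxygen"))     # False: in this model oxygen is necessary
```

In this toy model the match, the lightning strike, and the campfire all come out as INUS conditions, while oxygen does not, because every sufficient set requires it.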

Another good example of an INUS condition is the presence of a condom to prevent pregnancy. The condom is not necessary to prevent pregnancy; there are many ways. The presence of the condom does not guarantee prevention (efficacy with perfect use is ~98%, and real-world effectiveness is even lower). Effectiveness is lower than efficacy primarily because of compliance. In other words, you have to use the condom correctly to prevent pregnancy, and even then there are ways the condom can fail. However, when everything is perfect, it prevents pregnancy. Condoms are an unnecessary, insufficient, but non-redundant part of a sufficient condition in the prevention of pregnancy.

So how can we identify an INUS condition?

Causal Inference

In essence, to logically infer that X caused Y, we need to meet three requirements:

1. We must know that X preceded Y. It is not possible for a cause to follow or even coincide with an effect. It must come before it, even if it is fractions of a second.

2. X must covary with Y. In other words, Y must be more likely to occur when X occurs than when X does not occur.

3. The relationship between X and Y is free from confounding. What this means is that, when #1 and #2 are met, no other variable that could explain Y also covaries with X.
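The covariation requirement is the easiest of the three to state as arithmetic: compare how often Y occurs with X against how often it occurs without X. A minimal sketch, with made-up counts and a hypothetical helper name (`conditional_rates`), assuming there is at least one record in each group:

```python
def conditional_rates(records):
    """records: list of (x_occurred, y_occurred) booleans.

    Returns (rate of Y when X occurred, rate of Y when X did not occur).
    Assumes both groups are non-empty.
    """
    with_x = [y for x, y in records if x]
    without_x = [y for x, y in records if not x]
    return sum(with_x) / len(with_x), sum(without_x) / len(without_x)

# Invented counts in which Y is more common when X occurs.
covarying = ([(True, True)] * 30 + [(True, False)] * 70
             + [(False, True)] * 10 + [(False, False)] * 90)

# Invented counts in which Y is equally common either way.
not_covarying = ([(True, True)] * 20 + [(True, False)] * 80
                 + [(False, True)] * 20 + [(False, False)] * 80)

for name, records in [("covarying", covarying),
                      ("not covarying", not_covarying)]:
    p_with, p_without = conditional_rates(records)
    print(f"{name}: with X = {p_with:.2f}, without X = {p_without:.2f}")
```

A difference in the two rates satisfies only the covariation requirement. It says nothing about which came first or about confounding, and in real data you would also need to ask whether the difference is larger than chance alone would produce.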

*Definitions adapted from Shadish, Cook, & Campbell’s book “Experimental and Quasi-Experimental Designs for Generalized Causal Inference”

Let me explain each by using some examples:

EXAMPLE 1: A lit match (A) causes a forest fire (B) – YES!

- A precedes B. – MEETS

- Lit match occurs before forest fire.

- A covaries with B. – MEETS

- A forest fire is more likely to occur when there is a lit match than when there is no match.

- The relationship between A and B is free from confounding. – MEETS

- The lit match does not correlate with other factors, such as oxygen being present or leaves being dry.

- Oxygen is present whether the match is there or not.

- The leaves are dry whether the match is there or not.

Let’s look at one of the headlines I saw a few years ago on the New York Times website, claiming that good grades in high school mean better health in adulthood. Without going into detail about the study, let’s look at the criteria:

EXAMPLE 2: High grades in high school (A) cause better health in adulthood (B) – NO.

- A precedes B. – MEETS

- High school grades occur before health in adulthood is measured.

- A covaries with B. – MEETS

- Grades were positively correlated with adult health measures.

- The relationship between A and B is free from confounding. – FAILS

- On average, persons with higher high school grades have more access to resources than those with lower grades.

- On average, persons with higher high school grades are more intelligent than those with lower grades.

- On average, persons with higher high school grades are more motivated than those with lower grades.

(and probably a lot more)

All of these things are more plausible explanations for the correlation than “grades are good for your health”.

But notice that correlation is a requirement to infer cause, always. What I see all too often are detailed, lengthy explanations for things that are not correlated. An excellent example is “Lunar Fever”. I’ve seen lots of explanations for why emergency rooms and police stations are busier during a full moon, from very good (e.g., the light from the moon makes it more likely that people will be out and about) to looney (the human body is full of water, which is affected the way the tides are affected). The first explanation might be the most parsimonious one, but it’s still useless because THERE IS NO CORRELATION. Studies are pretty clear that E.R.s and police stations are not busier during a full moon than other times of the month.

EXAMPLE 3: The full moon (A) causes people to act nutty (B) – NO.

- A precedes B. – MEETS

- Behavior is measured after the full moon appears.

- A covaries with B. – FAILS

- There is no correlation between behavior and moon phases.

- The relationship between A and B is free from confounding. – PROBABLY MEETS

- There might be variables which are correlated with the full moon that have nothing to do with the moon phase, but it’s not really a relevant question if #2 isn’t met.

So how do we meet these requirements?

#1: To establish temporal precedence, we conduct experiments.

#3: We eliminate confounding variables by isolating the hypothesized cause – the only difference between one condition and another is the causal variable. To do this we need:

- a Counterfactual (equivalent comparison/placebo)

- Random assignment (explained below)

- Controls to avoid other confounds such as expectation (blind, double-blind, randomized order)

If, after measuring the hypothesized effect, the outcome establishes covariation (#2), the only remaining explanation for that covariation is that A causes B.

By the way, we eliminate hypothesized causes in the same manner, by setting up conditions in which the only explanation for the outcome is that A does not cause B.

EXAMPLE 4: Test the hypothesis that acupuncture (A) reduces pain (B).

- We conduct an experiment comparing acupuncture to doing nothing. In doing this, we’ve established temporal precedence because the treatment precedes the measure of pain.

- We find that when we compare the pain of controls to those who have received acupuncture, the former has more pain than the latter, establishing a correlation.

- However, are there confounding variables? Yes, there are. The reduction in pain could be caused by anything that covaries with acupuncture, including the fear of being stuck with needles and the expectation that the treatment will work.

What we have in this case is an improper counterfactual. Participants were not blind to the treatment or its expected effects. Also, the body probably releases endorphins in response to being stuck with needles, so while they might claim that acupuncture reduces pain, the explanation isn’t where or how the needles were placed.

When we change the counterfactual by comparing acupuncture to sham acupuncture, the correlation disappears. Those who receive the sham treatment are in no more pain than those who receive the “real” treatment.

When no randomly-assigned, double-blind, placebo-controlled experiments are possible…

One “control” that is absolutely necessary in any experiment to eliminate confounding variables is random assignment to treatment groups. In other words, the people who receive, say, the sham treatment in the acupuncture example are assigned to that treatment by a random process (like rolling a die). We do this because if we used any criterion other than randomness to assign, that criterion could explain any differences (or lack thereof) in outcomes. For example, if I put all of the people with headache pain in one condition and all of the people with back pain in the other, the outcome could be explained by the fact that these people have different medical conditions.
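Here is a small simulation sketch of why the assignment rule matters. All of the numbers are invented, and the “treatment” in this toy world does nothing at all, so any difference between groups can only come from how people were assigned. The names (`participants`, `mean_difference`) are hypothetical.

```python
import random
from statistics import mean

rng = random.Random(1)  # fixed seed so the sketch is repeatable

# Invented participants: (condition, reported pain on a 0-10 style scale).
# Back pain sufferers report more pain on average than headache sufferers.
participants = (
    [("headache", rng.gauss(4, 1)) for _ in range(500)]
    + [("back pain", rng.gauss(7, 1)) for _ in range(500)]
)

def mean_difference(assignments):
    """Mean pain of controls minus mean pain of the treated group.

    assignments[i] is True if participant i was put in the treated group.
    The treatment has no effect here, so pain scores are used unchanged.
    """
    treated = [pain for (_, pain), t in zip(participants, assignments) if t]
    control = [pain for (_, pain), t in zip(participants, assignments) if not t]
    return mean(control) - mean(treated)

# Assignment by a non-random criterion: headaches get the treatment.
by_condition = [condition == "headache" for condition, _ in participants]

# Assignment by a random process: the equivalent of a coin flip.
by_coin_flip = [rng.random() < 0.5 for _ in participants]

print(f"assigned by condition: {mean_difference(by_condition):.2f}")
print(f"assigned by coin flip: {mean_difference(by_coin_flip):.2f}")
```

Assigning by medical condition makes a useless treatment look like it cuts pain by about three points, while the coin flip leaves a difference near zero. Random assignment does not make the groups identical; it makes the differences between them a matter of chance rather than of the assignment rule.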

This is a problem for a lot of research, especially in education and health. For example, we cannot ethically assign people to smoke, randomly or otherwise, so how can we eliminate confounding variables? People who choose to smoke are different from people who do not in many, many ways, and any of those ways can explain higher rates of cancer. But I don’t know anyone who would deny that smoking causes cancer.

So what happens when we cannot do the kinds of “true” experiments that control for all possible confounds? Do we give up?

Of course not.

In these cases, we rely on evidence converging from different approaches to the question until the odds tell us that it is highly, highly unlikely that the correlation is spurious.

When it comes to smoking causing cancer, we first establish a correlation with temporal precedence. That’s easy. Smokers are much more likely to get cancer later in life than nonsmokers. But, since we cannot eliminate all confounding variables, we must conduct many different studies, eliminating hypothesized explanations. We know, for example, that smoking causes cancer in rats (that has its own ethical problems, but it’s been done). We can’t be sure that the effects are the same in humans, but when we reconcile that with other studies in humans that control for variables such as access to health care and amount of exercise, the probability that smoking does not cause cancer is reduced. The more studies and the more hypotheses eliminated, the more likely the remaining hypothesis (that smoking causes cancer) is the correct one.

I invite you to think about how we know that vaccines do not cause autism. While we cannot (ethically) randomly assign some kids to get vaccines while others do not, the answer becomes clear when approached from many different angles.

So I hope I haven’t tied your brain in knots with this over-simplified, yet lengthy explanation of causal inference. It’s a topic near and dear to my heart as a methodologist and one that I think skeptics should get a handle on if at all possible.

Hello,

Thank you for this. I teach an undergraduate methods course and I would like to use this post in my class. Please do not take it down anytime soon!

Mike

Great article!

I’m not sure of the relevance of “causal conditions”; these are descriptors of different causal items but not, as you point out, defining. Whether these conditions occur sheds no additional light on the validity of the suspected cause-effect.

In my experience the most ignored element when association is elevated to causality is the importance of any occurrence of non-association. In medical papers it is standard procedure to go from association to the ambiguous “risk factor” to causation without ever discussing evidence that failed to show association. Showing non-association is vastly more powerful than showing association. All 300 years of demonstrations of Newton’s laws of Gravity became suspect with just one orbit (Mercury) that did not show sufficient association between theory and data.

I discussed the issue of lack of association (correlation) in the piece and the bulk of the piece is not about “conditions”, but about requirements for causal inference. Did you stop reading 1/3rd of the way through?

I’m not sure what you mean by “the validity of the suspected cause-effect”; I assume you are referring to the validity of a causal claim? Although I’ve touched on validity (without naming it) here by discussing issues of confounding, it is not important to this explanation. This is necessarily a simplification of a very narrow and specific issue. I may discuss validity at a future date, although I certainly would not attempt to apply it to causal inference in a blog post. There are some very thick books written on the subject that take time and study to understand.